With the rise of Artificial Intelligence (AI), the front lines of the “disinformation wars” have evolved rapidly. In a world where information is being democratized, combating misinformation has never been more challenging. By generating and propagating misleading content – from fake videos to fake news articles – artificial intelligence is changing the face of information.

The Rise of AI-generated Content

Traditional fake news was mostly human-generated and often easy to debunk through simple fact-checking. Today’s AI, particularly models like GPT-4, can create content that appears indistinguishable from human-authored content. Whether it’s generating fake reports, creating synthetic voices, or manufacturing hyper-realistic video footage, AI has introduced a new level of sophistication in disinformation campaigns.

“Deepfakes,” for example, are videos in which AI replaces one person’s likeness and voice with another’s, giving them an eerily realistic appearance. With such technology, a public figure can appear to have said or done something they have never said or done, causing massive public outcries, market manipulation, or international incidents.

Counteracting AI with AI

To combat misinformation built on AI-generated fake content, technologists are turning AI against it. Deep learning models are being trained on videos, audio files, and written content to detect anomalies that may indicate tampering. While deepfakes look genuine to the human eye, they often contain subtle imperfections in lighting, shadows, and audio synchrony.

“Provenance tracking,” a proactive measure that uses blockchain or similar technologies to record the origins and alterations of digital content, can also be a powerful tool against misinformation when combined with artificial intelligence. Viewers would be able to check the legitimacy of a video clip immediately by comparing it to its original, blockchain-verified version.
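To make the idea of comparing a clip against a verified original more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of any real provenance system: the `ProvenanceLedger` class, its method names, and the `example-newsroom` source label are all hypothetical, and a simple in-memory hash chain stands in for an actual blockchain.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


class ProvenanceLedger:
    """Hypothetical append-only hash chain; a toy stand-in for a blockchain."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    def register(self, content: bytes, source: str) -> dict:
        """Record the fingerprint of an original piece of content."""
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else self.GENESIS
        record = {
            "content_hash": sha256_hex(content),
            "source": source,
            "prev_hash": prev_hash,
        }
        # The entry hash covers the record and links it to the previous entry.
        record["entry_hash"] = sha256_hex(json.dumps(record, sort_keys=True).encode())
        self.entries.append(record)
        return record

    def lookup(self, content: bytes):
        """Return the ledger entry matching this exact content, or None."""
        fingerprint = sha256_hex(content)
        for entry in self.entries:
            if entry["content_hash"] == fingerprint:
                return entry
        return None

    def chain_is_intact(self) -> bool:
        """Recompute every entry hash to detect edits to the ledger itself."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("content_hash", "source", "prev_hash")}
            expected = sha256_hex(json.dumps(body, sort_keys=True).encode())
            if entry["prev_hash"] != prev or entry["entry_hash"] != expected:
                return False
            prev = entry["entry_hash"]
        return True


ledger = ProvenanceLedger()
original = b"original video bytes"
ledger.register(original, source="example-newsroom")

print(ledger.lookup(original) is not None)               # registered original
print(ledger.lookup(b"edited video bytes") is not None)  # altered copy
print(ledger.chain_is_intact())
```

In this sketch, a byte-for-byte copy of the registered clip is found in the ledger, while any altered copy is not. One limitation worth noting: an exact hash also fails for harmless changes such as re-encoding or resizing, so a practical system would need more than this simple comparison.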

The Role of Big Tech

Tech giants like Facebook, Google, and Twitter sit at the center of the disinformation wars. Their platforms are primary conduits for spreading misleading content, and their moderation efforts have come under increased scrutiny.

These companies are responding by investing significantly in AI-driven moderation tools. These systems can flag, and sometimes remove, content that violates platform guidelines. They are, however, not without controversy. The balance between content moderation and freedom of speech remains a sensitive issue, and automated systems sometimes make mistakes, inadvertently suppressing legitimate voices.

Education and Awareness

A human-centered approach built on digital literacy education is crucial. AI can assist in detecting and mitigating fake news, but an informed public remains an essential line of defense. The spread of fake news can be reduced by teaching people to critically assess sources, cross-check information, and be wary of content that elicits strong emotional reactions.

Media organizations are responding as well by offering fact-checking services, transparently correcting mistakes, and emphasizing journalistic integrity.

The Future of the Disinformation Wars

The evolution of AI means that the nature of misinformation will keep changing. Soon, we may see AI-generated holograms or virtual realities used in disinformation campaigns. As technology becomes more advanced, so too must our defense mechanisms.

Countering the onslaught of AI-driven fake news requires collaboration between governments, tech companies, media organizations, and educational institutions.

As part of AI development, ethical considerations are essential. Developers and researchers must be aware of potential misuses and actively work to mitigate them. AI models may be designed with inherent “self-checks” in the future to discourage misuse.

Conclusion

Combating AI-driven disinformation is both a technological and a societal challenge. AI amplifies the threat of sophisticated fake news, but it also provides powerful tools that can be used to counter that threat. A combination of technology, education, and collaboration can help society meet the challenge head-on, ensuring that truth remains valuable and distinguishable in our information age.

Takeda Fellows at the Forefront of AI in Healthcare

Takeda Fellows at the Forefront of AI in Healthcare  Strategic Design of Neural Network Architectures

Strategic Design of Neural Network Architectures

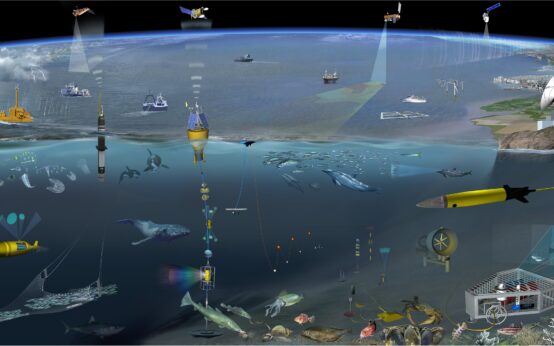

The Future of Ocean Current Exploration

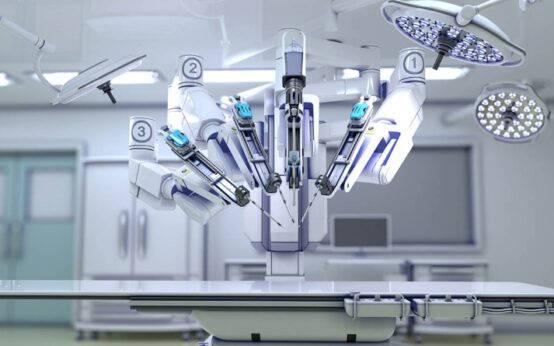

The Future of Ocean Current Exploration  Medical Minds and Machines in Healthcare

Medical Minds and Machines in Healthcare