At the beginning of the digital revolution, few could have predicted the tremendous advances artificial intelligence (AI) would make. Today, chatbots, one of the most visible manifestations of AI, are an integral part of our daily lives. They serve as digital companions, streamline business operations, and provide instantaneous customer support. As we strive to make these tech interactions more human-like, however, we must understand and mitigate the potential pitfalls of treating chatbots as human.

The Quagmire of Misplaced Trust and Over-reliance

When chatbots are cloaked in human characteristics, users are more likely to place unwarranted trust in them. We must remember that, at their core, chatbots are products of human-defined algorithms. They lack the emotional depth, intuition, and moral compass intrinsic to human beings. Yet if we start regarding them as trusted advisors or counsellors, we may prioritize their recommendations over our own intuition or even expert human advice. This can have cascading implications, especially in fields requiring nuanced decision-making.

Tackling a Myriad of Ethical Dilemmas

As we humanize chatbots, we unintentionally enter a maze of ethical questions. If these AI entities are granted a semblance of humanity, should they then be protected or granted rights? And if so, to what extent? It is important to distinguish between chatbots and living beings: chatbots can simulate human emotions, but they do not possess emotions, sentience, or desires; they lack the innate qualities of living beings.

The Underbelly of Emotional Manipulation

The commercial world is rapidly recognizing the power of emotionally astute chatbots. There’s a burgeoning risk that businesses might fine-tune their chatbots to tap into human emotions more manipulatively. A chatbot that seemingly “understands” human feelings might coax users into decisions that may not be in their best interest, from sharing personal details to making financial commitments. This emotional subterfuge underscores the need for transparency in AI design and function.

Navigating the Murky Waters of Blurred Boundaries

As AI's capabilities continue to grow, so does the risk of blurring the line between human and machine. If we continue to give chatbots human traits, AI interactions may come to overshadow human connections. As a result, we could lose sight of the importance of real human relationships, and societal norms could be reshaped.

A Shift in Accountability Dynamics

Viewing chatbots as sentient entities may allow their creators to deflect responsibility for malfunctions, biases, and other problems. Developers, corporations, or other stakeholders may sidestep responsibility by blaming errors on the chatbot's "nature," thus obscuring the lines of accountability.

The Reinforcement of Stereotypical Constructs

Chatbots often mirror societal constructs, sometimes solidifying existing stereotypes. By giving them gendered voices, personalities, or roles based on traditional norms, we run the risk of amplifying biases, inadvertently shaping and influencing societal perceptions, especially for younger, more impressionable users.

The Dilemma of Unrealistic Expectations

When chatbots are envisioned as capable of emotional intelligence similar to that of humans, users' expectations soar. However, when users run into the limitations of these digital assistants, disillusionment can set in, leading to mistrust or skepticism.

Conclusion

In essence, anthropomorphism provides a comforting lens through which we observe and interact with technology's ever-expanding reach. Maintaining a discerning perspective is crucial, however, as we immerse ourselves further in digital spaces and AI continues to advance rapidly.

Takeda Fellows at the Forefront of AI in Healthcare

Takeda Fellows at the Forefront of AI in Healthcare  Strategic Design of Neural Network Architectures

Strategic Design of Neural Network Architectures

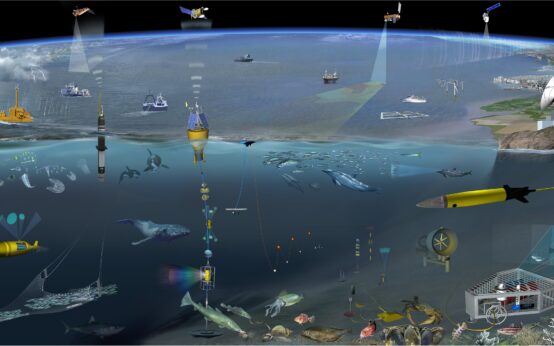

The Future of Ocean Current Exploration

The Future of Ocean Current Exploration  Medical Minds and Machines in Healthcare

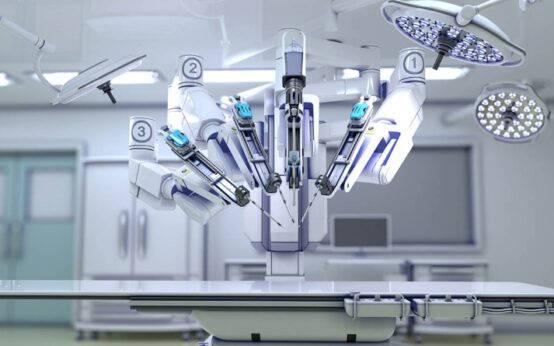

Medical Minds and Machines in Healthcare